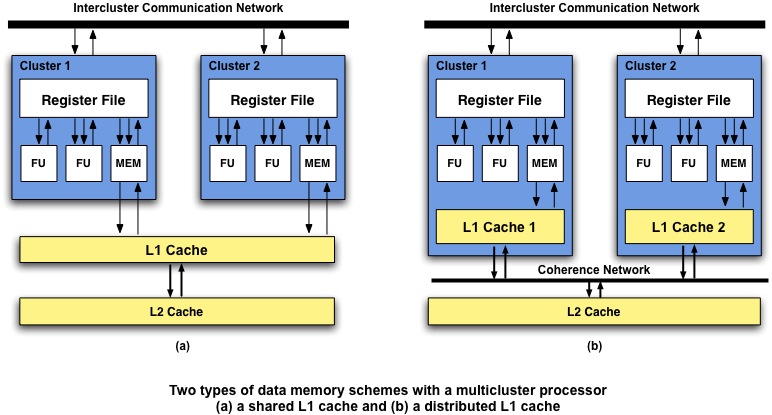

The datapath is the portion of the processor that carries data, such as the function units, the register files, and various multiplexors. The datapath is a good candidate for customization because it is typically over-designed. For example, a centralised register file quickly becomes a bottleneck with increasing number of FUs. A decentralized design, with "clusters" of FUs having a local register file is a viable alternative which scales well with increasing number of FUs. While most previous work in clustered datapath designs have used a shared data cache model, a decentralized cache can have similar scalability benefits. However, the use of distributed caches can significantly change the issues a compiler must consider when partitioning code.

We have developed techniques and algorithms to schedule effectively for such irregular architectures. Region based Hierarchical Operation Partitioning (RHOP) has been developed to handle clustered architectures and Profile-guided Data Access Partitioning has been developed to distribute the data across caches.

RHOP

A single monolithic register file is a major component of cost and delay of the datapath. With increasing number of FUs, the porting requirements cause the total cost of the register file to grow quadratically with repect to the number of FUs. A decentralized register file is sometimes a better approach. With a decentralized register file, FUs are organized into clusters. Each cluster has a local register file accessible only to FUs within that cluster. Communication across clusters happens via a longer latency interconnection network. The following picture shows a clustered datapath.

Multi-clustered VLIW processors rely heavily on the compiler to efficiently divide up computation across each cluster so that they are evenly load balanced and require minimal communication. Communication on the critical path would likely lengthen the schedule, and load balancing effectively keeps all units in the system as busy as possible. This partitioning problem is similar to parallelization of multiprocessor workloads, but at a much finer-grained granularity. Our process for partitioning is the Region-based Hierarchical Operation Partitioning algorithm, or RHOP. RHOP annotates the dataflow graph with node weights and edge weights which estimate the load balancing impact and communication overhead, respectively. These are then used in a multilevel graph partitioning algorithm to estimate the overall schedule length and design an efficient partition for the code.

A multilevel algorithm coarsens highly related nodes together and places them into partitions. The nodes within the graph are continually coarsened by grouping pairs of nodes together. At each level of coarsening, a snapshot of the currently coarsened nodes is taken. When the number of coarse nodes reaches the number of desired partitions, coarsening stops. The coarse nodes are then assigned to different clusters, and the uncoarsening process begins. During uncoarsening, the algorithm backtracks across the earlier snapshots of coarse nodes, considering moving operations at each stage. A refinement algorithm is used to decide the benefits of moving a node from one cluster to another in order to improve the partition.

The refinement process traverses back through the coarsening stages, making improvements to the initial partition. At each uncoarsening stage, the coarsened nodes available at that point are considered for movement to another cluster. In order to properly improve the partition of the operations in the graph, the algorithm must have metrics for deciding which cluster to move from, the desirability of the current partition, and the benefits of an individual move. These metrics take into account the load on each cluster as well as the cuts in edges of the dataflow graph.

The graph below compares the performance of a 4-cluster machine using BUG (a popular greedy clustering algorithm) and RHOP with a single cluster machine containing the sum of the resources of all the clusters. The single cluster machine provides an upper-bound of performance. BUG only achieves 68% of the upper-bound due to the local, greedy heuristics breaking down for wide machines. RHOP increases performance to 79% of the upper bound.

Profile-guided Data Access Partitioning

Distributing the memory subsystem can further improve the scalability of large processor designs for many of the same benefits as decentralizing the register file. Each PE can have a smaller, faster data memory associated with it, thus enabling a more efficient processor design. While the use of a distributed data memorycan benefit scalability, it also presents many new challenges that can negatively affect performance. First, with a distributed memory, each PE would generally have a smaller amount of total memory associated with it than it would with a shared memory. This makes placement of memory operations more critical, as overcommitting a memory could significantly increase the number of cache misses. Second, using a distributed memory requires the use of a coherence protocol for correct execution. The overhead of the coherence protocol and congestion in the network could potentially decrease performance. Finally, the caches could be replicating data across the PEs, which could waste space in the memory subsystem.

Code generation for distributed data memory requires the compiler to carefully examine the data access patterns of each individual memory operation. Analysis of the memory accesses of each operation can help to determine when individual data accesses are causing others to either hit or miss in the cache. In addition, the compiler can estimate the contribution each memory operation has to the overall working set. Placing too many operations in a single cache could potentially increase the number of cache misses. Thus, given profile information about affinities between operations and working set sizes, the compiler can proactively combine or split operations across the distributed data caches in order to improve performance.

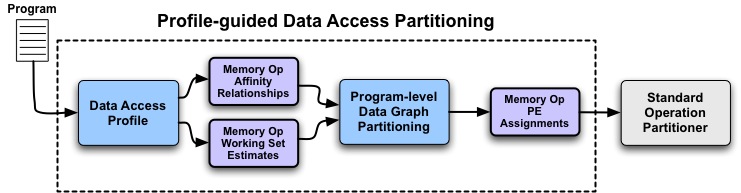

Our technique for data access partitioning includes three steps. The dotted box indicates the main parts of our profile-guided approach. During the first step, a profile of the data accesses is performed which determines affinities that memory operations have with one another. We use a pseudo-cache simulation in order to determine whether any pair of operations would prefer to occur in the same cache or be kept in separate caches. The second step builds a program-level data access graph of all the memory operations, using the statistics gathered during the profile. This graph is then partitioned to determine the memory operation placement across the PEs. In the third step, a standard operation partitioner is used to produce a block-level partitioning of the remainder of the program, cognizant of the preplaced memory operations.

Relevant Publications

- None.

Page last modified January 22, 2016.